The ML reading list #1

Infinite context lengths, evolutionary model merging, and more


Published: Sat, 04 May 2024

Introduction

I read a lot of articles and papers on ML, and I often come across interesting ML libraries along the way. It'd be a shame to hoard them all to myself, so I thought I'd share them in the downtime between bytes that take a while to make (such as the one I'm working on right now).

My recommendations

This year, there has been a lot of focus on improving context length, with models like Claude 3 and Mamba. Here are a few papers on infinite context length models that I found interesting and that could lay the foundations for some incredible models in the future:

- Mamba - This paper is certainly worth a read if you're looking to broaden your horizons. While Mamba has reportedly shown subpar performance compared to transformers, it is still a fascinating architecture that might offer some significant advantages over them. I found the memory-level optimisations particularly interesting, as the authors implement optimisations similar to those in FlashAttention (which was authored by one of the Mamba authors), but for state space models.

- Leave No Context Behind - Google took a stab at an infinite context window with its Infini-attention transformer. While it is still just a prototype, something like this could be the future of transformers.

- Megalodon - Not to be outdone, Meta AI also published an infinite context window transformer, just with a much cooler name. This architecture has the same purpose as Infini-attention, but goes about it quite differently. It's hard to compare the two directly without more benchmarking, though.
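If "state space model" sounds abstract, here is a minimal sketch of the general idea: a plain linear recurrence that carries a fixed-size state through the sequence. To be clear, this is not Mamba's selective scan or its memory-optimised kernel, and it is not how Infini-attention or Megalodon work either. The function name `ssm_scan` and the toy parameters are made up for illustration.

```python
def ssm_scan(xs, a, b, c):
    """Run a toy diagonal linear state space model over a list of scalars.

    Per step (elementwise over the state):
        h_t = a * h_{t-1} + b * x_t
        y_t = sum(c * h_t)
    Returns the outputs and the final state.
    """
    state = [0.0] * len(a)
    ys = []
    for x in xs:
        # The state is overwritten in place of the old one; no history is kept.
        state = [a_i * h_i + b_i * x for a_i, h_i, b_i in zip(a, state, b)]
        ys.append(sum(c_i * h_i for c_i, h_i in zip(c, state)))
    return ys, state


# Hypothetical toy parameters: a two-element state with different decay rates.
decay = [0.5, 0.9]
b_in = [1.0, 1.0]
c_out = [1.0, 1.0]

_, short_state = ssm_scan([1.0] * 10, decay, b_in, c_out)
_, long_state = ssm_scan([1.0] * 10_000, decay, b_in, c_out)

# The state has the same size no matter how long the input was.
print(len(short_state), len(long_state))
```

The point of the sketch is only that the memory carried between steps stays the same size as the sequence grows, which is what makes very long contexts appealing for this family of models.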

It wasn't just infinite context models that piqued my interest recently. Here are a few other things that might interest you:

- Statistics Vs Machine Learning: The Two Worlds - I always thought of machine learning as just a subset of statistics, but this thought-provoking article completely challenged my perspective on the two fields. The article goes into the differing schools of thought held by statistics and machine learning, which was very eye-opening to me.

- Evolutionary model merging - Sakana AI's blog post on their new technique for merging models together. I had never considered merging models, but being able to combine models with expertise in different domains has a lot of potential. This is definitely worth a read.

- WebLLM - An LLM framework built on the experimental WebGPU API, which is available in most modern browsers and lets web developers use the client's GPU. This allows models like Llama 2 and Mistral 7B to run completely client-side in the browser. It's a very neat library that I hope to find a use for in the future.

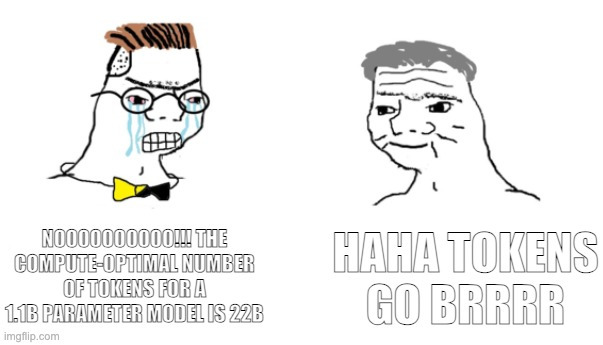

- TinyLlama - The creators of this model had one thought: "What if Chinchilla was wrong?". The TinyLlama model is 1.1B parameters, so the compute-optimal training would consist of 22B tokens according to Chinchilla. The researchers trained the model on 3T tokens instead. Since this model's release, Besiroglu et al. (2024) academically bitch-slapped the Chinchilla authors with their statement of "the reported estimates are inconsistent with their first two estimation methods", so the TinyLlama team might have been right in their distrust of the chinchilla scaling laws.

What I imagine the TinyLlama team is like.
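The TinyLlama numbers above are easy to check yourself. Here is a quick sketch, assuming the roughly 20-tokens-per-parameter rule of thumb implied by the 1.1B to 22B figure. The function name is hypothetical, and the parameter and token counts are the rounded ones quoted above, not exact values.

```python
# Rule of thumb implied by the figures above: ~20 training tokens per parameter.
TOKENS_PER_PARAM = 20


def chinchilla_optimal_tokens(n_params, tokens_per_param=TOKENS_PER_PARAM):
    """Rough compute-optimal token budget for a model with n_params parameters."""
    return n_params * tokens_per_param


tinyllama_params = 1_100_000_000             # 1.1B parameters (rounded)
tinyllama_tokens = 3_000_000_000_000         # 3T training tokens (rounded)

optimal_tokens = chinchilla_optimal_tokens(tinyllama_params)
overtraining_factor = tinyllama_tokens / optimal_tokens
tokens_per_param = tinyllama_tokens / tinyllama_params

print(f"Chinchilla-style budget: {optimal_tokens / 1e9:.0f}B tokens")
print(f"Actual budget is about {overtraining_factor:.0f}x larger")
print(f"That is roughly {tokens_per_param:.0f} tokens per parameter")
```

In other words, TinyLlama saw well over a hundred times more tokens than the rule of thumb would suggest.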